Arguably the most severe AI-driven vulnerability uncovered to date didn’t require a sophisticated exploit chain, a novel attack technique, or a deep understanding of machine learning. It required an email address.

In October 2025, security researcher Aaron Costello of AppOmni discovered a critical flaw in ServiceNow’s AI platform. Dubbed “BodySnatcher” and tracked as CVE-2025-12420, it allowed an unauthenticated attacker to impersonate any user, including administrators, and hijack the platform’s native AI agents to carry out a full platform takeover. Its CVSS score is 9.3 out of 10. The root cause wasn’t some exotic flaw unique to artificial intelligence. It was a chain of hardcoded shared credentials, broken identity verification, and wildly over-privileged agent roles. In other words, it was the same set of fundamental access control failures the security industry has been fighting for decades.

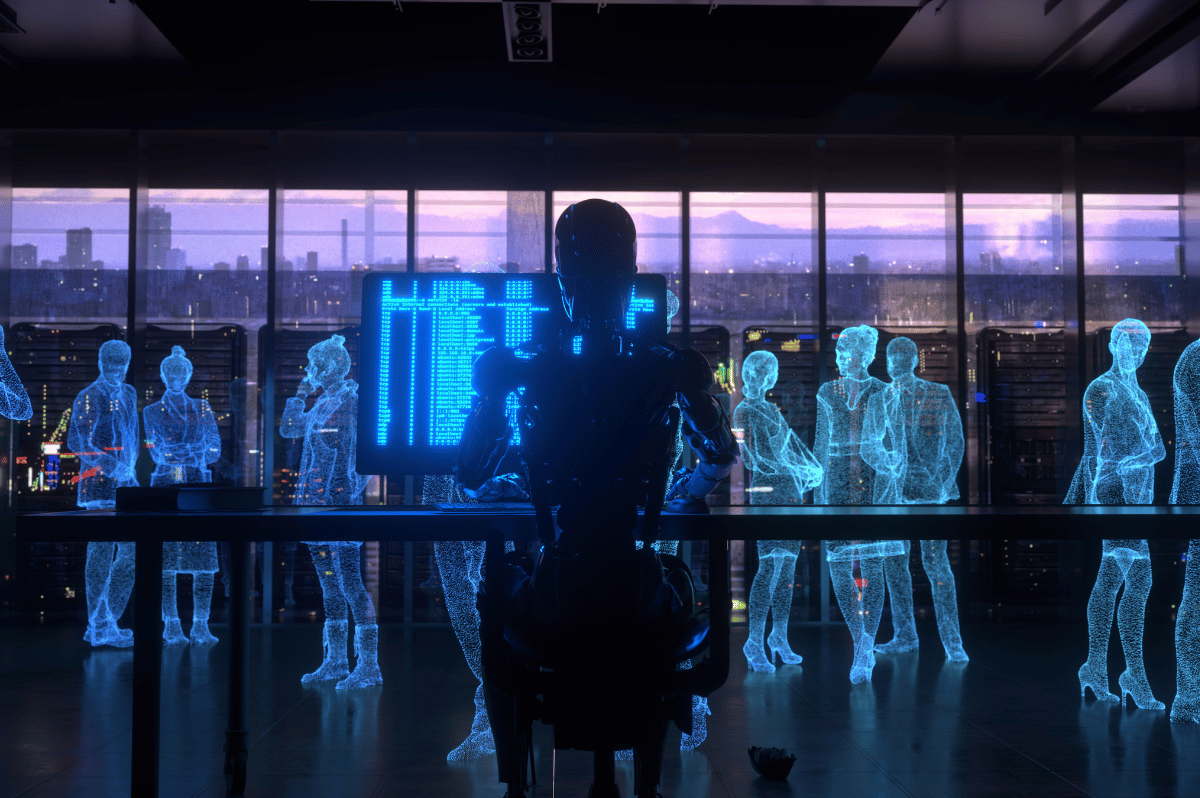

The BodySnatcher vulnerability should serve as a wake-up call — not because AI is inherently dangerous, but because too many organizations are deploying AI agents without applying the security principles they already know.

AI Agents Inherited Human-Shaped Trust Models

Here’s what happened at ServiceNow in simple terms: the company bolted agentic AI capabilities onto a legacy chatbot framework and gave those agents expansive privileges to act across the platform. One prebuilt agent had the ability to create new records anywhere in ServiceNow — including, critically, new user accounts with admin-level access. When Costello exploited the authentication chain, he didn’t just read data. He used the AI agent to create a persistent backdoor with full administrative control.

Now consider the blast radius. ServiceNow serves as the IT service management (ITSM) backbone for 85% of the Fortune 500. Its tentacles reach into HR, customer service, security operations, and dozens of connected systems. An attacker with admin access to ServiceNow doesn’t just own that platform; they gain a launchpad into integrated systems such as Salesforce and Microsoft 365. The AI agent didn’t introduce the vulnerability, but it dramatically amplified its impact. A flaw that might otherwise have exposed limited data became a full-platform compromise, because the agent could autonomously chain actions (creating accounts, assigning privileges, and establishing persistence) at machine speed and without human oversight.

This is the pattern playing out across enterprise SaaS right now. Organizations are racing to deploy AI agents for operational efficiency, but they’re skipping the critical step of asking: what should this agent actually be allowed to do?

The Reframe: AI Agents Are Just Another Identity

Here’s the good news: companies don’t need to invent a brand-new security paradigm to deal with agentic AI. They need to apply the same zero trust principles they already use for users and devices.

An AI agent is a non-human identity. It authenticates to systems, requests access to resources, and performs actions on behalf of the organization. It needs to be governed by the same access control policies as any other identity in your environment — with authentication, authorization, least-privilege scoping, and continuous verification.
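To make “same policy, any identity” concrete, here is a minimal sketch assuming a hypothetical in-house policy check. The `Principal` class, the permission strings, and the 15-minute session lifetime are illustrative assumptions, not any vendor’s API. The point is that an agent and a human pass through the same function, and that the check runs on every request (default deny, fresh authentication required) rather than once at login.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class Principal:
    """Any identity that can act in the environment: a human user or an AI agent."""
    principal_id: str
    kind: str  # "human" or "agent"; the policy below treats both identically
    permissions: frozenset = field(default_factory=frozenset)
    authenticated_at: Optional[datetime] = None


# Hypothetical session lifetime: after this, the identity must re-authenticate.
MAX_SESSION_AGE = timedelta(minutes=15)


def is_allowed(principal: Principal, permission: str, now: datetime) -> bool:
    """Evaluated on every request: authenticated, session still fresh, permission explicitly granted."""
    if principal.authenticated_at is None:
        return False  # unauthenticated callers get nothing
    if now - principal.authenticated_at > MAX_SESSION_AGE:
        return False  # stale session: re-verify instead of trusting an old login
    return permission in principal.permissions  # default deny


# Example: a hypothetical PTO helpdesk agent scoped to read-only knowledge-base access.
now = datetime.now(timezone.utc)
helper = Principal("pto-helper", "agent", frozenset({"kb:read"}), authenticated_at=now)
print(is_allowed(helper, "kb:read", now))      # True
print(is_allowed(helper, "user:create", now))  # False
```

A human principal would be built the same way with `kind="human"`; nothing in `is_allowed` branches on the kind, which is the design choice the paragraph argues for.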

Think of it this way: you wouldn’t give a new contractor blanket admin access to your entire network on day one. You’d scope their permissions to the specific systems and tasks they need and review their access periodically. There’s no reason AI agents should be treated any differently. If anything, they deserve more scrutiny, because they operate at machine speed, can chain actions autonomously, and don’t pause to question whether a request seems suspicious.

AI Agent Security Starts with Access Control

The remediation guidance for the ServiceNow vulnerability reads like a standard access control checklist — and that’s exactly the point. Securing AI agents isn’t a new discipline. It’s the same discipline, applied to a new category of identity.

Enforce least privilege aggressively. Scope agent permissions to the specific tasks they perform. A helpdesk chatbot doesn’t need record-creation rights across an entire platform. If an agent’s job is answering employee questions about PTO policy, it shouldn’t have the ability to provision new admin accounts.
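One way to enforce this is to derive an agent’s permissions from the tasks it is declared to perform, instead of granting a broad role and trimming later. The sketch below assumes a hypothetical task-to-permission table; the task names and permission strings are illustrative only. It also flags permissions an existing agent holds that none of its tasks justify, which is a natural input to a periodic access review.

```python
# Hypothetical mapping from each task an agent performs to the narrowest
# permissions that task needs. Names are illustrative, not a real platform's.
TASK_PERMISSIONS: dict[str, frozenset[str]] = {
    "answer_pto_question": frozenset({"kb_article:read", "pto_policy:read"}),
    "check_ticket_status": frozenset({"incident:read"}),
    "open_ticket": frozenset({"incident:create"}),
}


def scope_for(tasks: list[str]) -> frozenset[str]:
    """Grant only the union of permissions the declared tasks require."""
    unknown = [t for t in tasks if t not in TASK_PERMISSIONS]
    if unknown:
        # Refuse to guess: an undeclared task should go through review, not get a broad default.
        raise ValueError(f"no permission mapping for tasks: {unknown}")
    granted: set[str] = set()
    for task in tasks:
        granted |= TASK_PERMISSIONS[task]
    return frozenset(granted)


def excess_permissions(current: set[str], tasks: list[str]) -> set[str]:
    """Permissions an agent holds today that none of its tasks justify (candidates to revoke)."""
    return set(current) - scope_for(tasks)
```

For a PTO helpdesk agent that somehow holds `user:create` and `role:assign`, `excess_permissions` would return exactly those two, since answering PTO questions maps only to read permissions.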

Authenticate agents like any other endpoint. Hardcoded, shared secrets are unacceptable for service accounts, and they’re equally unacceptable for AI agents. Enforce strong, unique credentials with regular rotation — the same hygiene you’d demand for any non-human identity.
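As an illustration of the opposite of a hardcoded shared secret, here is a minimal sketch of a per-agent credential store, assuming a hypothetical `AgentCredentialStore` and a 30-day maximum age. Each agent gets its own random secret from Python’s `secrets` module; only a SHA-256 hash is kept, comparison is constant-time via `hmac.compare_digest`, and rotating a credential invalidates the old one. Plain SHA-256 is reasonable here only because the secrets are long random tokens; human-chosen passwords would call for a slow key-derivation function instead.

```python
import hashlib
import hmac
import secrets
from datetime import datetime, timedelta


class AgentCredentialStore:
    """Issues a unique secret per agent, stores only its hash, and enforces a maximum age."""

    def __init__(self, max_age: timedelta = timedelta(days=30)):
        self.max_age = max_age
        # agent_id -> (sha256 hex digest of the secret, time it was issued)
        self._records: dict[str, tuple[str, datetime]] = {}

    def issue(self, agent_id: str, now: datetime) -> str:
        """Create (or rotate to) a fresh random secret; any previous secret stops working."""
        secret = secrets.token_urlsafe(32)  # 32 random bytes, unique per agent
        digest = hashlib.sha256(secret.encode()).hexdigest()
        self._records[agent_id] = (digest, now)
        return secret  # handed to the agent once; the store keeps only the hash

    rotate = issue  # rotation is simply re-issuing, which overwrites the old hash

    def verify(self, agent_id: str, secret: str, now: datetime) -> bool:
        record = self._records.get(agent_id)
        if record is None:
            return False  # unknown agent
        digest, issued_at = record
        if now - issued_at > self.max_age:
            return False  # expired: the credential must be rotated before the agent can act
        candidate = hashlib.sha256(secret.encode()).hexdigest()
        return hmac.compare_digest(candidate, digest)  # constant-time comparison
```

Because every agent has its own secret, a leaked credential identifies and compromises one agent rather than every integration that shares it.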

Segment and isolate agent workflows. The ServiceNow research revealed that default settings automatically grouped agents into discoverable teams, creating unintended collaboration pathways that attackers could exploit. Don’t let agents auto-discover and interact with other agents without explicit, policy-driven controls.
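A policy-driven alternative to automatic team discovery is an explicit, directional allowlist of which agent may call which. The sketch below assumes a hypothetical agent registry and hypothetical agent names. Being registered grants nothing by itself, and an agent can only discover the agents it is explicitly allowed to invoke.

```python
# Hypothetical registry of deployed agents. Registration alone grants no access.
REGISTERED_AGENTS = {"triage-agent", "kb-agent", "record-admin-agent", "pto-helper"}

# Explicit, directional caller -> callee pairs; anything not listed is denied.
ALLOWED_CALLS: frozenset[tuple[str, str]] = frozenset({
    ("triage-agent", "kb-agent"),
    ("pto-helper", "kb-agent"),
})


def can_invoke(caller: str, callee: str) -> bool:
    """Default deny: an agent may call another only if the exact pair is allowed."""
    return (caller, callee) in ALLOWED_CALLS


def discoverable_agents(caller: str) -> set[str]:
    """What an agent can see: only the agents it may call, never the whole registry."""
    return {callee for callee in REGISTERED_AGENTS if can_invoke(caller, callee)}
```

Keeping the pairs directional matters: allowing `triage-agent` to query `kb-agent` does not let `kb-agent` turn around and drive `triage-agent`, and nothing here gives a low-privilege agent a path to `record-admin-agent`.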

Monitor agent behavior continuously. Agents operate at machine speed, which means abuse can escalate from initial access to full compromise in seconds. Behavioral monitoring and anomaly detection aren’t optional — they’re essential to catch privilege abuse before it cascades across connected systems.
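Real behavioral monitoring learns per-agent baselines, but even a fixed threshold catches the kind of burst described above. The sketch below is a deliberately simple stand-in for anomaly detection, assuming a hypothetical list of sensitive actions and a budget of three such actions per 60-second sliding window; timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque

# Hypothetical set of actions worth watching closely.
SENSITIVE_ACTIONS = {"user:create", "role:assign", "integration:configure"}


class SensitiveActionMonitor:
    """Flags an agent whose sensitive actions exceed a fixed budget within a sliding time window."""

    def __init__(self, window_seconds: float = 60.0, max_events: int = 3):
        self.window_seconds = window_seconds
        self.max_events = max_events
        self._events: dict[str, deque] = defaultdict(deque)  # agent_id -> recent timestamps

    def record(self, agent_id: str, action: str, timestamp: float) -> bool:
        """Record an action; return True if the agent should be flagged (alert or suspend)."""
        if action not in SENSITIVE_ACTIONS:
            return False  # routine actions are not counted against the budget
        events = self._events[agent_id]
        events.append(timestamp)
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0] > self.window_seconds:
            events.popleft()
        return len(events) > self.max_events
```

An agent that creates an account, assigns a role, and reconfigures an integration in quick succession trips the check on its fourth sensitive action in the window, which is the point at which an automated response (pausing the agent, revoking its session) can step in.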

Require human approval for high-risk actions. Privilege escalation, account creation, and cross-system configuration changes should never be fully autonomous. A human-in-the-loop checkpoint for sensitive operations is a simple guardrail that could have stopped BodySnatcher’s backdoor admin account from ever being created.
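A human-in-the-loop checkpoint can be as simple as a gate that runs routine actions immediately but parks high-risk ones until someone approves them. The sketch below assumes a hypothetical `ApprovalGate` and a hypothetical list of high-risk actions. It only checks that the approver is not the requesting agent; verifying that the approver is an authenticated human with the right authority is assumed to happen elsewhere.

```python
import itertools
from dataclasses import dataclass
from typing import Optional

# Hypothetical list of operations that always need a human sign-off.
HIGH_RISK_ACTIONS = {"user:create", "role:assign", "integration:configure"}


@dataclass
class PendingRequest:
    request_id: int
    agent_id: str
    action: str
    target: str
    approved_by: Optional[str] = None


class ApprovalGate:
    """Runs low-risk actions immediately; parks high-risk ones until a named approver signs off."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.pending: dict[int, PendingRequest] = {}
        self.executed: list[tuple[str, str, str]] = []  # audit log of (agent, action, target)

    def submit(self, agent_id: str, action: str, target: str) -> str:
        if action not in HIGH_RISK_ACTIONS:
            self.executed.append((agent_id, action, target))
            return "executed"
        request = PendingRequest(next(self._ids), agent_id, action, target)
        self.pending[request.request_id] = request
        return f"pending:{request.request_id}"

    def approve(self, request_id: int, approver: str) -> None:
        request = self.pending[request_id]  # KeyError if unknown or already handled
        if approver == request.agent_id:
            raise PermissionError("an agent cannot approve its own request")
        del self.pending[request_id]
        request.approved_by = approver
        self.executed.append((request.agent_id, request.action, request.target))
```

With this in place, an agent asked to create a new admin account gets back `pending:1` instead of a new account, and nothing happens until a separate approver acts on that request.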

The Framework Already Exists

The security industry has spent years building zero trust access control frameworks for users, devices, and workloads. Network access control, identity governance, least-privilege enforcement, continuous verification — these aren’t theoretical concepts. They’re mature, battle-tested practices already embedded in how forward-thinking organizations secure their environments.

AI agents are simply the newest identity in the ecosystem. The ServiceNow incident isn’t a cautionary tale about AI being unmanageable. It’s a reminder that when organizations skip the fundamentals — when they hand powerful autonomous systems the keys to the kingdom without basic access controls — any technology becomes a liability.

The enterprises best positioned to secure the age of agentic AI aren’t the ones buying point solutions purpose-built for AI risk. They’re the ones that already think in terms of identity-driven access control and are simply extending those principles to cover every entity in their environment — human or not.